TL;DR:

Vendors are slapping "AI-powered" on everything to get more attention (and possibly investment).

What AI actually does in cloud security right now:

Summarization: Creates summaries of security findings with additional context like resources, tags, logs

Tailored Remediation: Gives exact commands and IaC code to fix security issues

AI Chatbots: Help answer questions about findings and documentation

Rephrasing Findings: Changes how issues are explained based on who's reading (engineers, business folks, compliance team)

Natural Language Features: Turns language into automation tasks, security queries, and security policies

Bottom line: AI in cloud security is not living up to the market hype right now - it's making security tools easier to use rather than doing anything deeply technical or groundbreaking (with very few exceptions).

I attended a security conference recently. One thing stood out - from CISOs to CXOs to vendors in the stalls.

AI. AI. Fricking AI.

Quantum security is picking up. Zero Trust is still present, as usual. But right now, the buzz is clearly AI.

I heard all sorts of statements from the speakers.

"Attackers are already using AI."

"Defenders must use AI."

“The only way to fight AI is with AI.”

Also, I couldn’t help but notice the subtle endorsement of the vendors’ Gen-V AI-Powered Hyperscale Cloud Security Solutions.

AI Powered. AI Driven. AI Infused. Whatever.

I’m not skeptical of new technology when I encounter it. But I’m wary of the people endorsing it (and possibly their intentions behind it). I double-check whether they are telling the truth, partial truths, or outright bluffing.

So, after the conference, I dedicated my weekend to seeing how companies (vendors and customers) use this new AI technology and whether AI in cloud security is really a game-changer that lives up to the hype.

What's AI?

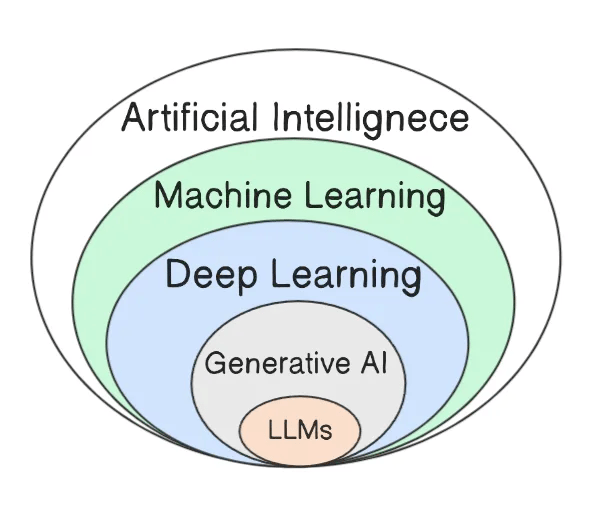

For beginners, let’s start with Artificial Intelligence (AI).

AI is a broad term for any computer system designed to mimic human intelligence.

Machine Learning (ML) is a specific AI approach in which systems learn from data rather than following explicit rules.

Generative AI (GenAI) is a category within AI that covers any system capable of generating new content. Large Language Models (LLMs) power text-based GenAI applications, but GenAI also includes image generation, music/audio creation, and other forms of content generation that don't necessarily use LLM technology.

Now, if you label LLM, GenAI, and ML as AI, you can label many existing cloud security tools and services as AI-powered (without changing a single line of code).

Amazon GuardDuty has been an AI-powered service for a few years now (even though its AI capabilities have some shortcomings).

Cloudflare has been using AI to defend against bots for a few years.

Microsoft Sentinel took the initiative to democratize AI for Security Operations in 2022 (even before this AI hype).

Note: I will use the terms “AI,” “GenAI,” and “LLMs” interchangeably for the rest of this blog post. I’m not talking about any ML models in this blog.

AI in Cloud Security

Vendors are at the forefront of innovation when it comes to using AI in cloud security (partly because it turns more heads and drives more investment).

Reading public blog posts and examining the features of cloud security tools can give anyone an idea of how AI is used and what is working.

Just because an AI feature is present doesn’t mean every customer uses it and finds value. However, it does show that AI might simplify specific tedious tasks and solve some problems.

This blog post summarizes how AI is powering cloud security right now.

Note: I have added links to certain Cloud Security vendors (CNAPP & SIEM). These are strictly for reference, and I am not endorsing anyone in this post.

Summarization

LLMs are good at summarizing large texts. For them, the description of security findings is no different - just a set of words waiting to be summarized.

Security tools (with the help of LLMs) can create summaries with additional context (such as the resource's name, tags, and logs) instead of a generic description stating, "The following assets are vulnerable to XYZ."

Summarization is not helpful for straightforward checks. An unencrypted database is just an unencrypted database, no matter how you summarize it.

However, the summarization feature is a bit useful when dealing with complex findings like:

multiple low-severity findings chained to form higher-severity ones

issues across multiple cloud and identity platforms synergizing to create misconfigurations

anomalies detected using logs from multiple sources (endpoints, applications, etc.)

The summary relates different issues and gives a high-level overview of the findings.

Tailored Remediation

Just like summarization includes your asset & findings data as part of the output, LLM-generated remediation steps give you exact instructions for remediating misconfigurations.

There are no more placeholders.

If your S3 bucket is misconfigured, AI provides the exact AWS CLI commands to secure it, along with prefilled information like the bucket name and region.
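To make the "no more placeholders" idea concrete, here is a minimal sketch of how a tool could prefill a remediation command from a finding. The `finding` dictionary and the `build_s3_remediation` helper are hypothetical and do not reflect any vendor's implementation; the only real piece is the AWS CLI command `aws s3api put-public-access-block`, which is one common way to lock down a publicly exposed bucket.

```python
import shlex


def build_s3_remediation(bucket: str, region: str) -> str:
    """Return an AWS CLI command that blocks public access on one bucket."""
    config = ",".join([
        "BlockPublicAcls=true",
        "IgnorePublicAcls=true",
        "BlockPublicPolicy=true",
        "RestrictPublicBuckets=true",
    ])
    args = [
        "aws", "s3api", "put-public-access-block",
        "--bucket", bucket,
        "--public-access-block-configuration", config,
        "--region", region,
    ]
    # Quote every argument so values taken from a finding cannot inject shell syntax.
    return " ".join(shlex.quote(arg) for arg in args)


# Hypothetical finding as a security tool might store it.
finding = {
    "check": "s3-public-access",
    "resource": "example-logs-bucket",
    "region": "eu-west-1",
}

command = build_s3_remediation(finding["resource"], finding["region"])
print(command)
```

The command is built from a fixed template rather than free-form generated text, so the only parts that vary are the values pulled from the finding. Whether vendors rely on templates, an LLM, or a mix of both is not something this sketch can tell you.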

A seemingly significant advantage shows up when the remediation needs changes in IaC repos - CloudFormation, Terraform, etc. AI-generated code might save time for users (especially developers) as they can copy and paste the remediation steps.

Can IaC scanners help with remediation instead of relying on AI-generated code? I think they can.

Wiz seems to have improved this use case a bit. Wiz now dynamically suggests the best remediation strategy based on the risks identified. Suppose there’s a toxic combination (multiple low-severity bugs synergizing to form higher-severity attack paths). In that case, Wiz seems to use AI to work out the smallest remediation that breaks the combination.

Another interesting example is Orca’s IAM Policy Optimizer. This feature uses AI to find the best possible remediation for overprivileged roles while considering security and long-term operability.

Orca’s IAM Policy Optimizer.

Source: https://orca.security/resources/blog/multi-cloud-support-iam-policy-optimizer/

AI Chatbots

Almost every Cloud Security vendor has a chatbot integrated now.

In simple terms, these chatbots integrate the natural language processing capabilities of LLMs (like Claude) and the context of your cloud (assets, findings, logs, misconfigurations, etc).

If you want to know more about a finding, you can ask the chatbot directly (instead of copying the finding to ChatGPT/ClaudeAI). These chatbots reply to your queries just like a knowledgeable peer would.

Example chatbot queries:

Why does this finding matter?

What’s the best way to fix this?

What policies do you support (based on the tool’s documentation)?

Explain baselines and how to configure CIS baselines.

What’s the current compliance status of XYZ Kubernetes cluster?

Note: Every vendor’s chatbot can have different capabilities. Some chatbots might only access the tool’s documentation and the current findings. You can keep chatting with it and get disappointed to find the chatbot doesn’t know anything outside what’s already in the dashboard. But hey, having just a dumb chatbot will still make a security product AI-powered. 🤑💰

Integrating an AI Chatbot has another advantage for cloud security tools with complex UIs.

You don’t have to click the third option on the left navigation bar, scroll down, and make a few more clicks to understand your Kubernetes cluster's compliance score.

You can just ask an AI Chatbot about it without navigation (saving you some time and brain cells). 🤓

Rephrasing Findings

Every team speaks differently.

You can’t just send a finding about an overprivileged IAM role to different teams and expect them to understand and prioritize the fix.

With Engineering, you highlight the principle of least privilege and lateral movement if compromised.

With Business, you highlight how exploiting it leads to data breaches and associated reputation damage and fines.

With Risk & Compliance, you highlight how it might cause an audit finding or compliance risk.

The rephrasing feature (mostly part of AI Chatbots) helps with this. Depending on the person and their team, the security tool can rephrase the findings to help them better understand.
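As a rough illustration of audience-based rephrasing, the sketch below builds a different LLM prompt for each team from the same finding. Everything here is an assumption for illustration: the `AUDIENCE_FOCUS` mapping, the `build_rephrase_prompt` helper, and the prompt wording are hypothetical and not taken from any vendor's product. The sketch only builds the prompt text and does not call a model.

```python
# What to emphasize for each team, following the three examples above.
AUDIENCE_FOCUS = {
    "engineering": "least privilege and lateral movement if the role is compromised",
    "business": "the data breach, reputation damage, and fines that exploitation could lead to",
    "compliance": "the audit finding or compliance risk it might cause",
}


def build_rephrase_prompt(finding: str, audience: str) -> str:
    """Build a prompt asking an LLM to rephrase one finding for one team."""
    key = audience.lower()
    focus = AUDIENCE_FOCUS.get(key)
    if focus is None:
        raise ValueError(f"unknown audience: {audience!r}")
    return (
        f"Rewrite the following security finding for the {key} team. "
        f"Emphasize {focus}. "
        "Do not add facts that are not in the finding.\n\n"
        f"Finding: {finding}"
    )


# Hypothetical finding text.
finding_text = "IAM role 'example-role' allows iam:* on all resources."
for team in AUDIENCE_FOCUS:
    print(build_rephrase_prompt(finding_text, team))
    print("---")
```

The finding itself never changes; only the instruction about what to emphasize does.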

This feature's biggest bet (according to CNAPP providers) is that it democratizes CloudSec tools. Security teams should not use these tools in silos but rather share them with other teams that prioritize and remediate the findings. Different teams can then understand the issues & take action on them - making “Security is Everyone’s Responsibility” come true.

Natural Language to Automation

When security vendors combine the chatbot with AI agents, something interesting happens.

AI agents can understand what you want from your natural language input and act on your behalf when required (e.g., invoking an API, changing a configuration, deploying tools, etc.).

Scheduling weekly security scans doesn’t require you to click in multiple places.

Tell the chatbot to do it.

You don't need to look up the cron pattern to send an executive report on the current state of compliance on the first day of every month.

Tell the chatbot; it will work out the pattern and set up the automation for you.
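For reference, the cron pattern in question is short. In standard five-field cron syntax (minute, hour, day of month, month, day of week), "first day of every month" is `0 9 1 * *`, where the 09:00 send time is my assumption. The small `matches` helper below is a hypothetical, simplified checker that only understands plain numbers and `*`; it is a sketch for verifying the pattern, not a full cron implementation.

```python
from datetime import datetime

# minute hour day-of-month month day-of-week
MONTHLY_REPORT_CRON = "0 9 1 * *"  # 09:00 on the 1st of every month (time is assumed)


def matches(cron: str, when: datetime) -> bool:
    """Check whether a datetime matches a simple five-field cron pattern.

    Simplifications: only plain numbers and '*' are supported (no ranges,
    lists, or steps), and all fields must match, whereas real cron treats
    day-of-month and day-of-week as alternatives when both are restricted.
    """
    fields = cron.split()
    if len(fields) != 5:
        raise ValueError("expected five cron fields")
    # Cron counts Sunday as 0; Python's weekday() counts Monday as 0.
    cron_weekday = (when.weekday() + 1) % 7
    actual = [when.minute, when.hour, when.day, when.month, cron_weekday]
    return all(f == "*" or int(f) == v for f, v in zip(fields, actual))


print(matches(MONTHLY_REPORT_CRON, datetime(2025, 3, 1, 9, 0)))  # first of the month
print(matches(MONTHLY_REPORT_CRON, datetime(2025, 3, 2, 9, 0)))  # second of the month
```

A check like this is also a cheap way to sanity-test whatever schedule a chatbot generates before you trust it with an executive report.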

Again, AI saves time otherwise spent on navigation & clickops.

Does it prevent the creation of the same automation multiple times? Can it delete existing automation and workflows, causing more harm than it can help?

I don’t know yet. It depends heavily on each vendor’s chatbot.

Natural Language to Query

Every CNAPP vendor provides a proprietary query language or interface to query your cloud asset inventory. SIEM vendors are infamous for their proprietary query language to query logs.

Proprietary stuff means you, as a security engineer or analyst, must learn how to use it. There’s a learning curve involved.

You need to relearn every time you switch to a different org using a different tool.

With AI integrated into the product, that gets a bit easier. You tell it what you want, and it creates the query for you and loads it into the security tool.

I say “a bit easier” and not “a problem solved by AI.”

AI works well when creating simple queries, but it fails once some complexity is involved. Until AI solves this problem, you should learn the proprietary languages and ask the chatbot whenever you have a doubt (and hope it doesn’t hallucinate).

Natural Language to Code & Policy Generation

This is similar to Natural Language to Query conversion but for tools outside the CNAPP or SIEM.

ARMO uses GPT-3 to convert natural language to Rego-based OPA policies.

Natural Language to Rego rule generation using GPT-3

Source: https://www.armosec.io/blog/armo-chatgpt-create-custom-controls-faster/

So, What's AI-powered, again?

Many security vendors have just integrated some sort of LLM and call their tools “AI Powered.” Their AI capabilities differ vastly from vendor to vendor.

As you have seen, the LLMs (and possibly the underlying AI agents) in cloud security products are not doing anything groundbreaking (at least on the technical side).

It's not automatically detecting more issues than non-AI counterparts.

It’s not detecting additional assets or attack patterns (it just summarizes what’s already detected).

It’s not matching up to the hype in the market right now.

At most, AI is currently:

Improving the UX and simplifying the UI of the security tool (you don’t have to do clickops or search for the right option in the dropdown menu; just ask the chatbot to do it)

Summarizing and paraphrasing the findings (same issue but different summary if you’re part of engineering or business)

Lowering the barrier for non-security folks to use cloud security tools (just ask the chatbot for documentation, convert your sentence to query, ask for a custom Rego policy, etc.)

Speaking on the technical front, examples like natural language to policy and automation generation are good but not good enough (yet).

Also, these natural-language-to-policy examples are intermittent events. I don’t think I (or others) will use this feature every day in a way that allows me to confidently say AI has saved me a substantial amount of time and effort (without learning the policy/query language).

I would love to see more features tackling recurring problems (like Orca’s IAM Policy Optimizer) from other security vendors.

Note: Vendors also portray AI as capable of analyzing low-severity findings and prioritizing them, essentially helping (if not replacing) SOC teams. I can’t comment on it now, but I will probably contact practitioners using AI features to understand their thoughts and opinions.

I didn’t mention hallucinations and the impact of faulty AI-generated summaries or remediation steps. Maybe that’s a topic for another day.

Moar AI, Moar Questions

The more I read about AI-powered Cloud Security products, the more questions I have. Here are a few I have in mind now:

1. A good number of AI-powered CloudSec vendors also have AI-SPM capabilities. Are they dogfooding their AI-SPM capabilities to secure their AI features?

2. Can AI take costly actions on your behalf and generate a bill? Something like Self-Denial of Wallet?

3. If AI greatly simplifies the UX of CloudSec tools and lowers the bar so anyone can manage them, do Cloud Security vendor certifications (like PCCSE) hold value? Will they even be required in the future?

4. What’s the impact of hallucinations when chatbots reply with logs? What if the remediation isn’t a remediation, or if it creates another misconfiguration?

5. What do access control and logging look like? Can an engineer with limited access get findings and information from different accounts using Chatbots? If the engineer uses AI to create automation in the security tool, how does it look in the logs?

If you know the answers to any of the above, please reach out to me on LinkedIn or email [email protected].

P.S. I'm currently taking on select freelance projects related to securing AWS environments. If you or someone you know needs help securing cloud environments, I'd love to chat! Just reply to this email or reach me at [email protected].